What $10 of GPU time buys you in 2025

We are in 2025. Compute is cheap. With \$2 you can rent a beefy GPU by the minute and nerd out all evening. This entertainment is cheaper than going to the movies (and, depending on who you ask, more fun). Let’s see how many discrete logarithms we can break on a \$10 budget…

The problem

We want to solve the discrete logarithm problem in an interval. Our group is an elliptic curve over a 256-bit prime field, and the interval size is about 70 bits. This problem has interesting applications (for example, when key generation didn’t use full entropy), but let’s talk about that another time.

The canonical algorithm for this is Pollard’s Kangaroo algorithm (extended to distributed search by van Oorschot and Wiener). It takes about $(2+o(1))\sqrt{N}$ group operations, where $N$ is the size of the interval. That is an asymptotic measure; here we want to get concrete and see what it costs, in 2025 USD, to break (say) a 70-bit discrete log challenge.
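To make the idea concrete, here is a minimal sketch of the kangaroo method in Python. It is a toy under loud assumptions: it works in the multiplicative group modulo the small prime $2^{31}-1$ rather than on an elliptic curve, it uses one tame and one wild kangaroo with a single trap instead of distinguished points, and the jump rule (`index`, with its multiplier constant) and the retry loop over `salt` are illustrative choices, not taken from the GPU code used in this post.

```python
from math import isqrt

def kangaroo(g, h, p, a, b, max_retries=32):
    """Toy Pollard kangaroo: return x in [a, b] with pow(g, x, p) == h, else None.

    Assumes the order of g exceeds the interval width plus the tame distance.
    """
    width = b - a
    # Jump sizes are powers of two; grow k until the mean jump is ~sqrt(width)/2.
    k = 1
    while (2**k - 1) // k < max(1, isqrt(width) // 2):
        k += 1
    jumps = [2**i for i in range(k)]
    hops = [pow(g, s, p) for s in jumps]      # g^s for every jump size
    tame_steps = 4 * (sum(jumps) // k) + 1    # about 4x the mean jump size

    for salt in range(max_retries):           # new pseudo-random jump rule per retry
        def index(pos):
            return (((pos ^ salt) * 2654435761) >> 16) % k

        # Tame kangaroo: start at g^b, hop forward, set a trap where it stops.
        tame, d_tame = pow(g, b, p), 0
        for _ in range(tame_steps):
            i = index(tame)
            tame, d_tame = tame * hops[i] % p, d_tame + jumps[i]

        # Wild kangaroo: start at h = g^x and hop with the same rule.
        wild, d_wild = h, 0
        while d_wild <= width + d_tame:       # beyond this it has missed the trap
            if wild == tame:                  # g^(x + d_wild) == g^(b + d_tame)
                return b + d_tame - d_wild
            i = index(wild)
            wild, d_wild = wild * hops[i] % p, d_wild + jumps[i]
    return None


# Example: 7 generates the multiplicative group modulo the prime 2^31 - 1.
p, g = 2**31 - 1, 7
a = 1_000_000
b = a + 2**20                                 # a 20-bit interval
secret = a + 654_321
found = kangaroo(g, pow(g, secret, p), p, a, b)
print(found == secret)
```

Both kangaroos make on the order of $\sqrt{N}$ hops, which is where the square-root cost comes from. A real implementation replaces the single trap with distinguished points so that many kangaroos can run in parallel.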

The code

We take a GPU implementation of Pollard’s Kangaroo, with minimal modifications to make it work on different GPU architectures.

The platform

We have the following GPUs available for rent:

| GPU name | \$ / hour | Where hosted | Year | TFLOPS (FP32) | Compute Capability | VRAM | Memory Bandwidth |
|---|---|---|---|---|---|---|---|
| Titan Xp | \$0.049/hr | Korea | 2017 | 11.7 | 6.1 (Pascal) | 12 GB | 547 GB/s |
| RTX PRO 6000 (Blackwell) | \$0.676/hr | USA | 2025 | 119.0 | 12.0 (Blackwell) | 96 GB | 1792 GB/s |
| H200 (Hopper) | \$2.800/hr | France | 2024 | 197.0 (est.) | 9.0 (Hopper) | 141 GB | 4800 GB/s |

The machines are rented from vast.ai, a marketplace for GPU work. GPU owners can rent out GPU time and set a floor bid price. I don’t know much about vast.ai, but the experience overall was great: top up \$5 and, 5 minutes later, connect to random machines with big GPUs (some with a residential IP address, yay).

Results

- How many 70-bit logarithms can you get with a \$10 budget?
  - Titan Xp: about 4500
  - RTX PRO 6000: about 710. This is a bit surprising, but note that the RTX PRO 6000 costs roughly 14x more per hour than the Titan Xp (\$0.676 vs \$0.049).
  - H200: TODO
- How many 64-bit logarithms can you get with a \$10 budget?
  - Titan Xp: about 13428
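The arithmetic behind these figures is just rented seconds divided by seconds per solve. A small sketch, using the hourly prices from the table and the mean run times from the logs further down; the helper name `solves_per_budget` is only for illustration:

```python
def solves_per_budget(budget_usd, usd_per_hour, seconds_per_solve):
    """Expected number of solves a budget buys at a given hourly price."""
    rented_seconds = budget_usd / usd_per_hour * 3600
    return rented_seconds / seconds_per_solve


# Hourly price from the table, mean seconds per solve from the benchmark logs.
titan_xp_64 = solves_per_budget(10, 0.049, 54.7)    # Titan Xp, 64-bit
rtx_pro_70 = solves_per_budget(10, 0.676, 75.8)     # RTX PRO 6000, 70-bit

print(f"Titan Xp, 64-bit:     ~{titan_xp_64:.0f} solves for $10")
print(f"RTX PRO 6000, 70-bit: ~{rtx_pro_70:.0f} solves for $10")
```

The results land close to the numbers quoted above; small differences come from rounding and from using the mean rather than the median run time.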

Conclusions

- For mid-size problems (around 64 bits), older and cheaper GPUs are great. They are pretty efficient and can be optimal in cost per computation, even if not in raw speed.
- For smaller problems you’re probably better off with just a CPU.
  - A 50-bit problem takes around 11.5s on an Intel E5-2680 v4 @ 2.40GHz. On a Titan Xp it takes about 17s (203 per hour, or about 41000 for \$10).

Eye candy

Example problem for 70-bit ECDLP on an interval:

Start : 0x8C33238D4C8F2F7FACD4FF3A5BCB73550C7045F3297110CEFE6DF0F00AF193D4

Stop : 0x8C33238D4C8F2F7FACD4FF3A5BCB73550C7045F32971110EFE6DF0F00AF193D3

[1] Priv: 0x8C33238D4C8F2F7FACD4FF3A5BCB73550C7045F3297111067279BA00590DBE69

Pub: 0364FBFDFF900733243009E571BC503762EC7A809B132D50F2558B2C431E6E4AE0

>>> bin(0x8C33238D4C8F2F7FACD4FF3A5BCB73550C7045F32971110EFE6DF0F00AF193D3 - 0x8C33238D4C8F2F7FACD4FF3A5BCB73550C7045F3297110CEFE6DF0F00AF193D4 + 1)

'0b10000000000000000000000000000000000000000000000000000000000000000000000'

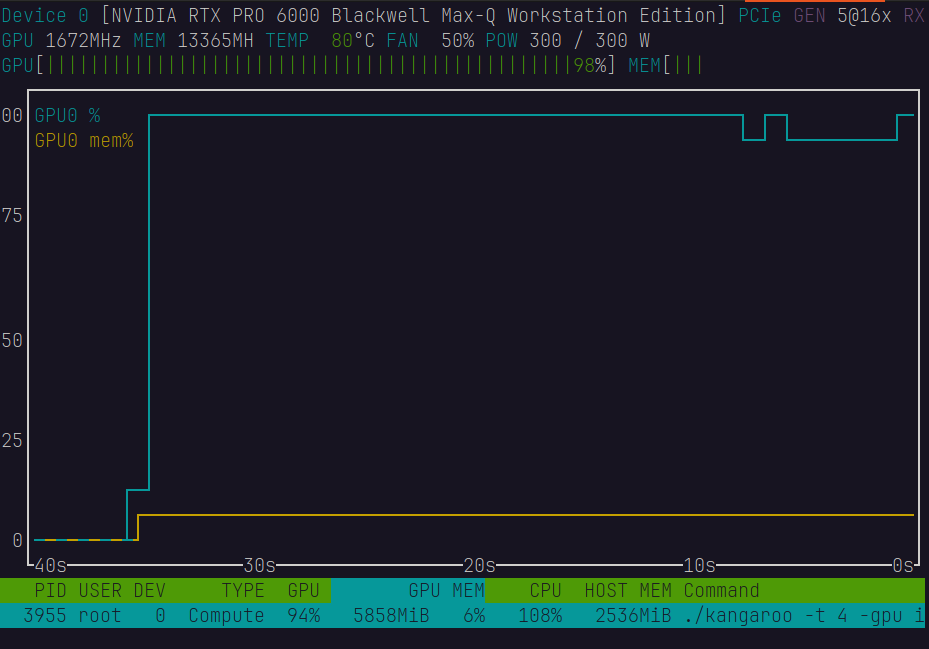

RTX PRO 6000 drawing 300 Watts:

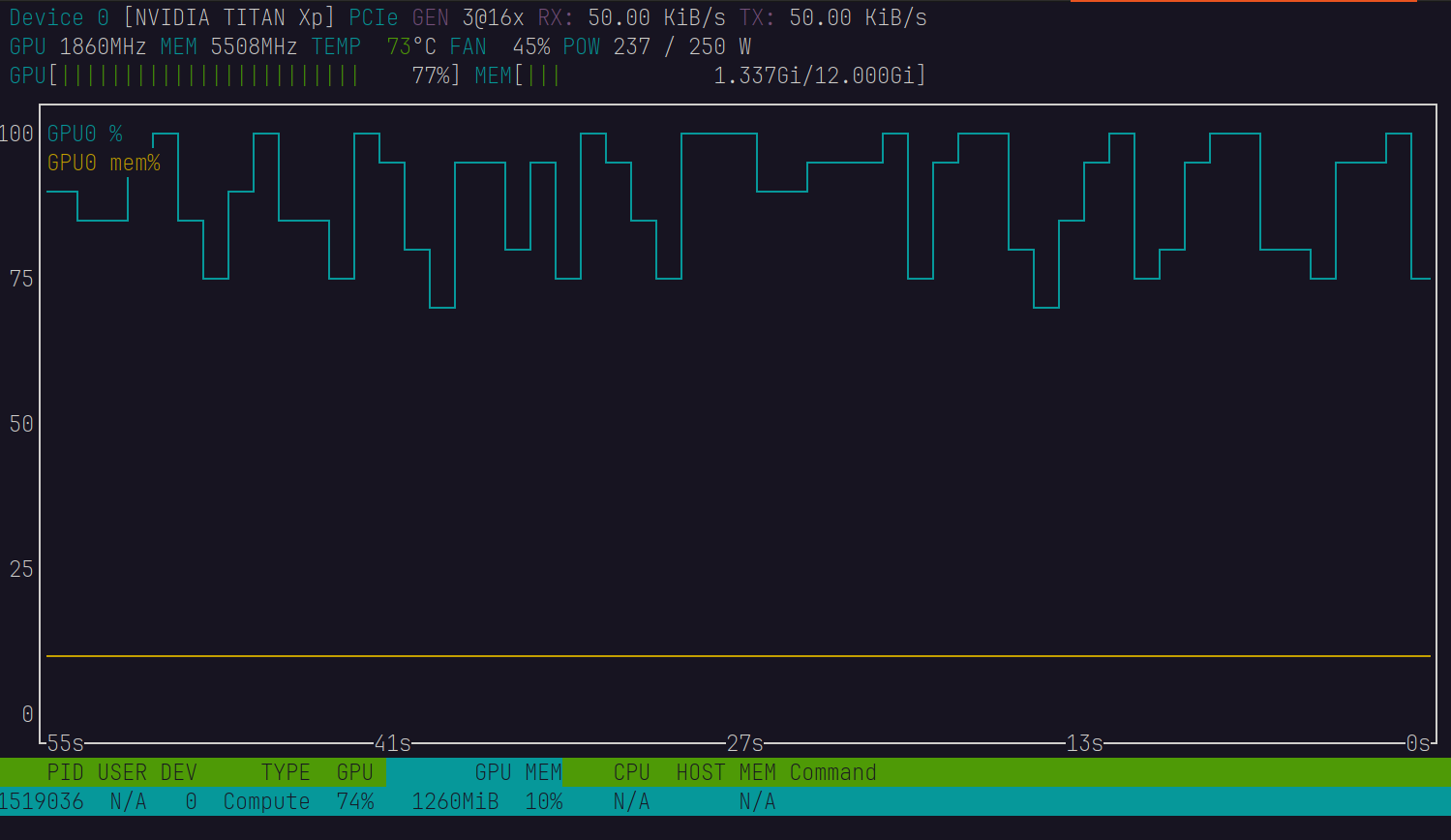

Titan Xp drawing about 250 Watts:

Log for the Titan Xp:

NVIDIA Titan Xp - 50-bit ECDLP Benchmark Results

Date: Fri Nov 7 06:07:01 PM CET 2025

========================================

Run 1: 21s

Run 2: 17s

Run 3: 17s

Run 4: 18s

Run 5: 17s

Run 6: 18s

Run 7: 17s

Run 8: 17s

Run 9: 18s

Run 10: 17s

All tests completed!

Statistics:

===========

Total runs: 10

Total time: 177s (2m 57s)

Mean time: 17.70s

Median time: 17.0s

Min time: 17s (Runs 2, 3, 5, 7, 8, 10)

Max time: 21s (Run 1)

Sorted times: 17, 17, 17, 17, 17, 17, 18, 18, 18, 21

10 GPU-Hour Throughput Estimate:

=================================

10 GPU-hours = 36,000 seconds

Average time per challenge: 17.70s

Estimated challenges in 10 GPU-hours: ~2,033 challenges

Per hour rate: ~203 challenges/hour

Per day rate (24h): ~4,881 challenges/day

Hardware:

=========

GPU: NVIDIA Titan Xp (Pascal, Compute 6.1)

CPU: 4 threads

CUDA: 8.0

Configuration: GPU Grid(60x256), DP size=9

GPU Throughput: ~565-690 MK/s (typical: ~575 MK/s)

NVIDIA Titan Xp - 64-bit ECDLP Benchmark Results

========================================

Run 1: 40s

Run 2: 51s

Run 3: 43s

Run 4: 74s

Run 5: 79s

Run 6: 46s

Run 7: 65s

Run 8: 43s

Run 9: 66s

Run 10: 40s

All tests completed!

Statistics:

===========

Total runs: 10

Total time: 547s (9m 7s)

Mean time: 54.70s

Median time: 48.5s

Min time: 40s (Runs 1, 10)

Max time: 79s (Run 5)

Sorted times: 40, 40, 43, 43, 46, 51, 65, 66, 74, 79

10 GPU-Hour Throughput Estimate:

=================================

10 GPU-hours = 36,000 seconds

Average time per challenge: 54.70s

Estimated challenges in 10 GPU-hours: ~658 challenges

Per hour rate: ~66 challenges/hour

Per day rate (24h): ~1,584 challenges/day

Hardware:

=========

GPU: NVIDIA Titan Xp (Pascal, Compute 6.1)

CPU: 4 threads

CUDA: 8.0

Configuration: GPU Grid(60x256), DP size=9

GPU Throughput: ~565-690 MK/s (typical: ~575 MK/s)
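The summary numbers in the Titan Xp logs can be re-derived from the raw run times with Python's `statistics` module. A small sketch; `summarize` and its field names are just illustrative helpers:

```python
from statistics import mean, median

# Run times in seconds, copied from the two Titan Xp logs above.
runs_50 = [21, 17, 17, 18, 17, 18, 17, 17, 18, 17]
runs_64 = [40, 51, 43, 74, 79, 46, 65, 43, 66, 40]


def summarize(label, seconds):
    """Mean, median, spread and hourly rate for one batch of runs."""
    avg = mean(seconds)
    return {
        "label": label,
        "mean_s": avg,
        "median_s": median(seconds),
        "max_over_min": max(seconds) / min(seconds),
        "per_hour": 3600 / avg,
    }


stats_50 = summarize("50-bit", runs_50)
stats_64 = summarize("64-bit", runs_64)
for row in (stats_50, stats_64):
    print(row)
```

The max/min ratio gives a rough feel for the run-to-run spread: about 1.2 for the 50-bit runs and about 2 for the 64-bit ones.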

Log for the RTX PRO 6000:

Statistics:

================================================

Total runs: 10

All solved: 10/10 (100% success rate)

Total time: 758 seconds (12m 38s)

Minimum time: 49 seconds

Maximum time: 126 seconds

Mean time: 75.8 seconds

Median time: 75 seconds

Sorted times: 49, 51, 57, 72, 72, 78, 81, 86, 86, 126

Performance Metrics:

================================================

Average GPU throughput: ~3.9 GK/s

Range searched per run: 2^70 (1,180,591,620,717,411,303,424 keys)

Keys per second (avg): 1.56e+19 (2^64.09)

CPU-only is faster for 50-bit problems, slower for 64-bit problems

Key Observations:

1. Huge variance in CPU times: The probabilistic nature of Pollard's Kangaroo shows extreme variance for CPU-only mode:

- Fastest: 104s

- Slowest so far: 625s

- That's a 6x difference between best and worst case!

2. CPU vs GPU comparison (preliminary):

- CPU average so far: ~393s (based on first 4 tests)

- GPU average (from earlier): 54.7s

- GPU is ~7.2x FASTER than CPU for 64-bit problems ✅

3. This confirms the break-even point:

- 50-bit: CPU is 1.54x faster (11.5s vs 17.7s)

- 64-bit: GPU is 7.2x faster (55s vs ~393s)

- The crossover happens somewhere between 50 and 64 bits
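One way to look at the crossover is to compare the measured GPU times with a naive estimate: the heuristic $2\sqrt{N}$ group operations divided by the throughput reported in the logs. The sketch below assumes that "MK/s" and "GK/s" in the logs mean millions and billions of kangaroo jumps (group operations) per second, and it ignores the $o(1)$ term; `naive_seconds` is an illustrative helper.

```python
from math import sqrt


def naive_seconds(bits, ops_per_second):
    """Heuristic 2*sqrt(N) group operations divided by the logged throughput."""
    return 2 * sqrt(2**bits) / ops_per_second


# (label, interval bits, logged throughput, measured mean seconds)
cases = [
    ("Titan Xp, 50-bit", 50, 575e6, 17.7),
    ("Titan Xp, 64-bit", 64, 575e6, 54.7),
    ("RTX PRO 6000, 70-bit", 70, 3.9e9, 75.8),
]
for label, bits, rate, measured in cases:
    estimate = naive_seconds(bits, rate)
    print(f"{label}: naive {estimate:.2f}s, measured {measured}s, "
          f"ratio {measured / estimate:.0f}x")
```

The measured times sit well above the naive estimate: by roughly 150x at 50 bits, and by roughly 4x at 64 and 70 bits. That would be consistent with a fixed per-run cost on the GPU that dominates small problems, but these logs do not isolate it, so treat it as a hypothesis.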

Discussion / TODO

- code: there are no modifications to the code for different GPUs. this is probably very suboptimal. experiment with varying parameters

- this is probabilistic: factor in the success probability

- make an automated discovery of what’s the most economic platform to solve this with the live json bidding from vast.ai

- algorithmic: There are improvements by Galbraith, Pollard and Ruprai that bring the runtime down to $(1.661 + o(1))\sqrt{N}$ https://eprint.iacr.org/2010/617.pdf

- how to rent out your GPUs https://cloud.vast.ai/host/setup